Variational Bayes and the evidence lower bound

by benmoran

Variational methods for Bayesian inference have been enjoying a renaissance recently in machine learning.

Problem: normalization can be intractable when applying Bayes’ Theorem

Given a likelihood function $p(y\vert z)$ and a prior distribution $p(z)$ that we can evaluate, $p(y, z) = p(y\vert z)\,p(z)$ is the joint likelihood.

The posterior is just $p(z\vert y) = p(y, z) / p(y)$, where we divide by the evidence

$$p(y) = \int p(y, z)\, dz.$$

However, $p(y)$ is frequently intractable. For example, the integral may not admit a closed-form solution, and it is frequently high-dimensional, so even numerical methods like quadrature may not help much.
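To make the quadrature remark concrete, here is a minimal sketch on a toy model that is an assumption of this example, not part of the derivation: a prior $z \sim \mathcal{N}(0, 1)$ and a likelihood $y \vert z \sim \mathcal{N}(z, 1)$, for which the evidence is known to be $\mathcal{N}(y; 0, 2)$. The function names and grid settings are hypothetical.

```python
import numpy as np

def normal_pdf(x, mean, var):
    """Density of a univariate Gaussian with the given mean and variance."""
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)

def evidence_by_quadrature(y, num_points=2001, half_width=10.0):
    """Approximate p(y) = integral of p(y|z) p(z) dz with a Riemann sum on a grid.

    Assumed toy model: p(z) = N(0, 1), p(y|z) = N(z, 1).
    """
    z = np.linspace(-half_width, half_width, num_points)
    joint = normal_pdf(y, z, 1.0) * normal_pdf(z, 0.0, 1.0)  # p(y, z) on the grid
    dz = z[1] - z[0]
    return float(np.sum(joint) * dz)

y = 1.3
approx = evidence_by_quadrature(y)
exact = float(normal_pdf(y, 0.0, 2.0))  # closed form for this toy model
print(approx, exact)
```

In one dimension the grid works well. With the same 2001 points per axis, a 10-dimensional $z$ would need roughly $10^{33}$ grid points, which is why quadrature stops being practical.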

Here are three techniques we can use to approach it:

- If the prior $p(z)$ is conjugate to the likelihood $p(y\vert z)$, so that the posterior $p(z\vert y)$ has the same form as the prior, then the integral will have a simple closed form. Then the updates to the prior from the likelihood are often trivial to calculate.

- It is possible to draw samples from the posterior before we know the normalization factor. Then we can approximate expectations stochastically by sample averages. With enough samples, these averages converge to the true expectations; this is the theory behind MCMC techniques. Two hindrances arise: it is difficult to know how many samples are “enough”, and “enough samples” can also take a long time to generate.

- We can introduce an approximating distribution $q(z)$. If we can somehow measure the quality of the approximation and iteratively improve it as far as possible, we can use this approximation with confidence. This is the variational approach derived below.
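As an illustration of the conjugate case in the first bullet, here is a small sketch using a Beta prior on a coin's bias with Bernoulli observations. This model is an assumption chosen for the example: $z$ is the bias, $y$ is a particular sequence of flips, and the function names are hypothetical.

```python
from math import exp, lgamma

def log_beta(a, b):
    """Log of the Beta function B(a, b), via log-gamma."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)

def beta_bernoulli_update(a, b, heads, tails):
    """Posterior Beta parameters after observing the flips: just add the counts."""
    return a + heads, b + tails

def log_evidence(a, b, heads, tails):
    """log p(y) for one specific sequence with the given counts.

    The integral over the bias z collapses to a ratio of Beta functions.
    """
    return log_beta(a + heads, b + tails) - log_beta(a, b)

# Uniform prior Beta(1, 1), then 7 heads and 3 tails.
a_post, b_post = beta_bernoulli_update(1.0, 1.0, heads=7, tails=3)
evidence = exp(log_evidence(1.0, 1.0, heads=7, tails=3))
print(a_post, b_post, evidence)
```

Both the posterior and the normalizing constant come out in closed form here, so no sampling or optimization is needed. The other two techniques matter when no such conjugate pair is available.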

Variational lower bound

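Start with the KL from $q(z)$ to $p(z\vert y)$, and rearrange to isolate the interesting quantity $p(y)$:

$$\mathrm{KL}\left(q(z) \,\Vert\, p(z\vert y)\right) = \mathbb{E}_{q}\left[\log \frac{q(z)}{p(z\vert y)}\right] = \mathbb{E}_{q}\left[\log q(z) - \log p(y, z) + \log p(y)\right]$$

But $\log p(y)$ doesn’t depend on $z$, so we can pull it out of the expectation:

$$\mathrm{KL}\left(q(z) \,\Vert\, p(z\vert y)\right) = \mathbb{E}_{q}\left[\log q(z) - \log p(y, z)\right] + \log p(y)$$

Rearranging, we get

$$\log p(y) = \mathbb{E}_{q}\left[\log p(y, z) - \log q(z)\right] + \mathrm{KL}\left(q(z) \,\Vert\, p(z\vert y)\right)$$

Because we saw previously that $\mathrm{KL}\left(q(z) \,\Vert\, p(z\vert y)\right) \geq 0$, we have

$$\log p(y) \geq \mathbb{E}_{q}\left[\log p(y, z) - \log q(z)\right] = L(q)$$

This quantity $L(q)$ is the evidence lower bound (ELBO). It is a functional of the approximating distribution $q(z)$.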

This bound is valuable because it can be calculated without the unknown normalizing constant $p(y)$. However, it is equal to $\log p(y)$ at its maximum, when $\mathrm{KL}\left(q(z) \,\Vert\, p(z\vert y)\right) = 0$, which also implies $q(z) = p(z\vert y)$.
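The bound can be checked numerically on a model where everything is available in closed form. The sketch below assumes a toy model that is not part of the derivation: prior $z \sim \mathcal{N}(0, 1)$, likelihood $y \vert z \sim \mathcal{N}(z, 1)$, and a Gaussian approximation $q(z) = \mathcal{N}(m, s^2)$. For this model the true posterior is $\mathcal{N}(y/2, 1/2)$ and the evidence is $\mathcal{N}(y; 0, 2)$. The function names are hypothetical.

```python
import math

def elbo(y, m, s2):
    """E_q[log p(y, z) - log q(z)] for q = N(m, s2), in closed form.

    Assumed toy model: p(z) = N(0, 1), p(y|z) = N(z, 1).
    """
    # E_q[log p(y|z)] + E_q[log p(z)], using E_q[(y - z)^2] = (y - m)^2 + s2
    # and E_q[z^2] = m^2 + s2.
    expected_log_joint = (
        -math.log(2.0 * math.pi)
        - 0.5 * ((y - m) ** 2 + s2)
        - 0.5 * (m ** 2 + s2)
    )
    # -E_q[log q(z)] is the entropy of the Gaussian q.
    entropy = 0.5 * math.log(2.0 * math.pi * math.e * s2)
    return expected_log_joint + entropy

def log_evidence(y):
    """log p(y) for the toy model, where p(y) = N(y; 0, 2)."""
    return -0.5 * math.log(2.0 * math.pi * 2.0) - y ** 2 / 4.0

y = 1.3
gap_at_posterior = log_evidence(y) - elbo(y, m=y / 2.0, s2=0.5)  # q = true posterior
gap_elsewhere = log_evidence(y) - elbo(y, m=0.0, s2=1.0)         # q = prior
print(gap_at_posterior, gap_elsewhere)
```

The gap between $\log p(y)$ and the bound is exactly the KL divergence from $q(z)$ to the posterior, so it vanishes when $q$ matches the posterior and is strictly positive for any other choice, such as setting $q$ equal to the prior.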

We have transformed the problem of taking expectations into one of optimization. Now a new question arises – is this problem any easier than the integral we started with? Not necessarily! However, we can now make different choices for the form of $q(z)$, so if we can find a family of distributions that is amenable to our available optimization techniques and which also contains a good approximation to the true posterior, we will be happy with the trade-off.

For example, we can rewrite $L(q)$ in terms of yet another KL divergence, this time between the approximate posterior $q(z)$ and the prior $p(z)$ on the latent variables:

$$L(q) = \mathbb{E}_{q}\left[\log p(y\vert z)\right] - \mathrm{KL}\left(q(z) \,\Vert\, p(z)\right)$$

If $q(z)$ and $p(z)$ have the same form – for instance we have chosen them both to be Gaussian – then the second term will have a closed form expression, so if we have a good way to evaluate the first term, as in Kingma & Welling 2013, then we’ll be able to optimize $L(q)$ and solve the problem.
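For readers who want the intermediate step, the rewriting follows from splitting the joint as $p(y, z) = p(y\vert z)\,p(z)$ inside the bound. The Gaussian special case at the end is an illustrative assumption: a univariate $q(z) = \mathcal{N}(m, s^2)$ and a standard normal prior.

```latex
\begin{aligned}
L(q) &= \mathbb{E}_{q}\left[\log p(y, z) - \log q(z)\right] \\
     &= \mathbb{E}_{q}\left[\log p(y\vert z)\right]
        + \mathbb{E}_{q}\left[\log p(z) - \log q(z)\right] \\
     &= \mathbb{E}_{q}\left[\log p(y\vert z)\right]
        - \mathrm{KL}\left(q(z) \,\Vert\, p(z)\right)

% Closed form when q(z) = N(m, s^2) and p(z) = N(0, 1):
\mathrm{KL}\left(\mathcal{N}(m, s^2) \,\Vert\, \mathcal{N}(0, 1)\right)
  = \tfrac{1}{2}\left(s^2 + m^2 - 1 - \log s^2\right)
\end{aligned}
```

The first term rewards approximations that explain the data, while the KL term penalizes approximations that stray far from the prior.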

(This bound gets its name from the variational principle, applying the calculus of variations to optimize the functional without assuming a particular form for the density. However, we frequently assume a fixed-form approximation, using a parametric form for this density. In this case no calculus of variations is required, and the problem reduces to an ordinary optimization.)

Very nicely explained 🙂

in the beginning p(y,z) should be the joint probability not joint likelihood right? or I am mistaken here

Hi Mohamed. You’re right that p(y, z) is the joint probability density. I’m being sloppy to call this quantity the likelihood when we’re not considering it as a function of the parameters, e.g. .

nice post, man

Cool. You say ‘we replace expectations by optimization’. In my own mind, I see it more like ‘replace marginalization/integration, over all z, by expectation’. Thoughts?

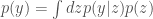

Hi Hugh, you’re right that we could have said “replace integration/marginalization by optimization”. But it’s also true that the quantity we’re trying to calculate is an expectation: $p(y) = \int dz\, p(y\vert z)\, p(z) = \mathbb{E}_{p(z)}\left[p(y\vert z)\right]$. The central problem is always estimating the normalizing constant, but we can choose to solve it in three different ways.

– Calculate the integral $p(y)$ analytically,

– Use Monte Carlo to sample from $p(z\vert y)$ and use empirical averages of the sample to approximate any expectation we want,

– Or use variational methods to optimize an approximation $q(z) \approx p(z\vert y)$.

Thank you for the nice post.

I have some minor questions regarding the approximation of the posterior p(y|z).

How do we approximate a conditional probability p(y|z) which should in fact be some function of (y, z) by a single variable function q(z)? I am somewhat confused about this part. Any comments? Thanks!

Hi Daniel. Interesting question!

The *posterior* distribution $p(z\vert y)$ is a distribution over $z$ alone, once we have conditioned on the observed data $y$. And $q(z)$ is also a distribution over $z$. So after fixing the value of $y$, it does make sense to compare these two distributions over $z$, and ask about the divergence between them.

The *joint* distribution $p(y, z)$ on the other hand is a bivariate distribution over all the possible values of $y$ and $z$ together.

Why is the integral p(y) intractable?

Actually never mind – solved.

Thank you so much for explaining how $L$ can be rewritten as a KL between approximate posterior and the prior over the latent variables.